
Ronen Lago, veteran cybersecurity leader and board advisor, shares his perspective on the growing risks of AI‑driven fraud and what CISOs can do to counter them.


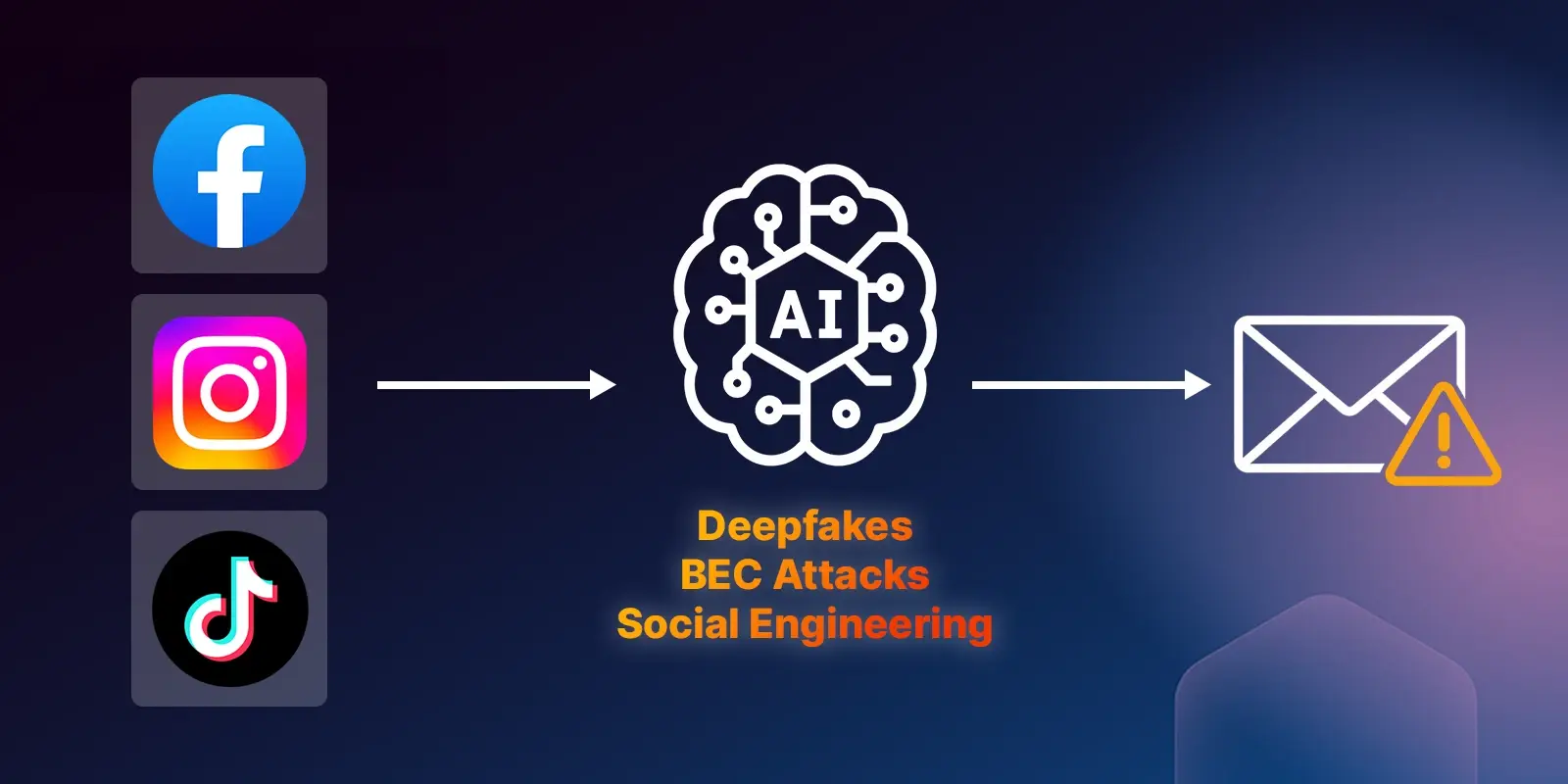

I’ve been tracking cybercrime for decades, but I’ve never seen the velocity of innovation from bad actors match what we’re experiencing today. A recent report from the Israel Internet Association (ISOC-IL) on “Algorithmic Scams” has uncovered hundreds of incidents where generative AI was leveraged to produce highly realistic deepfake video and image ads on platforms like Facebook, Instagram, and TikTok, often featuring prominent public figures encouraging viewers to “invest” in fraudulent schemes.

What’s remarkable (and deeply concerning) is that these campaigns don’t stop at social media. The same criminals recycle the personas, scripts, and AI‑generated content into spear‑phishing emails, business email compromise (BEC) attempts, and voice scams targeting both consumers and enterprises.

The Consumer Funnel Is Now an Enterprise Threat Vector

What starts as a consumer scam on Instagram can easily morph into a tailored, CEO‑impersonation email hitting your CFO’s inbox.

ISOC‑IL researchers mapped a four‑step fraud funnel that looks chillingly similar to the kill chains we defend against in enterprise phishing:

- Micro‑targeted ad: Delivered via sponsored posts using stolen images, AI‑generated deepfake video, and legitimate ad‑buying accounts.

- Cloaked landing page: Legitimate‑looking investment or banking site, often with SSL and perfect grammar.

- Social engineering: Phone or chat follow‑up to extract more funds.

- Reinvestment cycle: Victims are persuaded to “reinvest” until accounts are drained.

We’ve already seen cases where initial targeting data from social platforms fuels precision phishing inside corporate environments.

Why Legacy Filters (and Ad Review) Fail Alike

The weaknesses that let deepfake ads bypass review are the same weaknesses that let targeted phishing emails slip past your filters.

Traditional email security tools rely heavily on static rules, domain reputation, and pattern‑matching. We know they miss well‑crafted spear‑phishing.

Social platforms face the same weakness. ISOC‑IL found that scammers use Dynamic Ads and A/B testing to cloak their true content from automated review systems, showing benign ads to moderators and fraudulent ones to targeted victims.

Example of an AI‑generated deepfake investment ad disguised as a news interview. Targeted victims see the fake investment pitch (right), while moderators are shown a harmless product ad (left).

Source: ISOC‑IL “Algorithmic Scams” report.

This is conceptually identical to phishing redirect chains in email: what the security system “sees” is not what the end user experiences.
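
One way to make that gap concrete is to compare what two different viewers are served for the same link. The sketch below is a minimal illustration and does not come from the ISOC‑IL report: the `Snapshot` type, the `looks_cloaked` helper, and the 0.6 similarity threshold are all hypothetical, and it assumes the two page snapshots have already been collected (for example, one by a scanner and one by a consumer-like browser profile).

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from urllib.parse import urlparse


@dataclass(frozen=True)
class Snapshot:
    """What one viewer was served for a link (hypothetical structure)."""
    viewer: str      # e.g. "scanner" or "consumer-profile"
    final_url: str   # URL after all redirects
    body: str        # page text as rendered for that viewer


def looks_cloaked(a: Snapshot, b: Snapshot, min_similarity: float = 0.6) -> bool:
    """Flag a link when two viewers land on different hosts or very different content.

    The threshold is an illustrative assumption, not a tuned value.
    """
    different_host = urlparse(a.final_url).hostname != urlparse(b.final_url).hostname
    similarity = SequenceMatcher(None, a.body, b.body).ratio()
    return different_host or similarity < min_similarity


scanner_view = Snapshot(
    "scanner", "https://shop.example/offer",
    "Spring sale on kitchen blenders. Free shipping.",
)
victim_view = Snapshot(
    "consumer-profile", "https://invest.example/join",
    "Guaranteed returns. Deposit today to start trading.",
)
print(looks_cloaked(scanner_view, victim_view))  # True: the two viewers end up on different hosts
```

A check like this only helps if the second snapshot resembles what a targeted victim would actually receive, which is exactly what cloaking tries to prevent.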

And now, attackers are pairing these cloaking tricks with another dangerous upgrade: AI‑generated deepfake media that can convincingly impersonate people you know and trust.

Today’s fraudsters routinely embed AI‑generated deepfake media, from fabricated executive “video interviews” to cloned voices, into their scams. Detecting these requires advanced biometric analysis to catch facial inconsistencies, lip‑sync errors, and synthetic speech patterns. Most ad‑review and email‑security stacks still can’t reliably flag synthetic personas in motion or voice, leaving a critical blind spot.

Defense in Depth - Network, Endpoint, and Brand Guardianship

In my own practice, I’m advising CISOs to treat this as a cross‑channel threat. Email, web, and social vectors are now part of one attack continuum.

Defending against AI‑powered scams means connecting the dots across email, social media, and the web, because the attackers already do.
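
As a rough illustration of what connecting the dots can look like, the sketch below groups sightings from different channels by a shared indicator and surfaces any indicator seen in more than one channel. The `Sighting` record, the channel names, and the sample indicators are hypothetical; a real SOC would draw these from its own telemetry and brand-monitoring feeds.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Sighting(NamedTuple):
    """One observation of an indicator (hypothetical record shape)."""
    channel: str     # e.g. "social_ad", "email", "web"
    indicator: str   # e.g. a domain or an impersonated persona name


def cross_channel_indicators(sightings: Iterable[Sighting]) -> dict[str, set[str]]:
    """Return indicators observed in two or more channels, with the channels seen."""
    channels_by_indicator: dict[str, set[str]] = defaultdict(set)
    for s in sightings:
        # Normalize case so "Invest.Example" and "invest.example" match.
        channels_by_indicator[s.indicator.lower()].add(s.channel)
    return {ind: chans for ind, chans in channels_by_indicator.items() if len(chans) >= 2}


sightings = [
    Sighting("social_ad", "invest.example"),
    Sighting("email", "Invest.Example"),
    Sighting("web", "blog.example"),
]
for indicator, channels in cross_channel_indicators(sightings).items():
    print(indicator, sorted(channels))  # invest.example ['email', 'social_ad']
```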

My practical steps include:

- Continuous monitoring for brand abuse, not just on the open web but in paid social ads

- Machine‑learning–driven detection that evaluates behavioral signals, not just content

- User training that covers deepfake video and audio recognition and teaches staff to question unsolicited financial opportunities, regardless of channel

- Collaboration between security, marketing, and communications teams to share intel on brand impersonation campaigns spotted online
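
To illustrate the difference between content matching and behavioral signals, here is a minimal sketch that scores a message on how it behaves relative to known history rather than on its wording. It is a hand-weighted heuristic, not a trained model: the `Message` fields, the signal choices, and the weights are all assumptions made for illustration, and a machine-learning system would learn such weights from data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    """Hypothetical message metadata; field names are illustrative."""
    sender_domain: str
    reply_to_domain: str
    display_name: str
    requests_payment: bool


def behavior_score(msg: Message, known_domains: set[str], executive_names: set[str]) -> int:
    """Sum hand-picked weights for behavioral anomalies (weights are assumptions)."""
    score = 0
    if msg.sender_domain not in known_domains:
        score += 2  # first contact from this domain
    if msg.reply_to_domain != msg.sender_domain:
        score += 2  # replies are diverted elsewhere
    if msg.display_name in executive_names and msg.sender_domain not in known_domains:
        score += 3  # executive name arriving from an unfamiliar domain
    if msg.requests_payment:
        score += 1  # a financial ask raises the stakes
    return score


suspicious = Message("ceo-mail.example", "payments.example", "Dana Cohen", True)
print(behavior_score(suspicious, known_domains={"corp.example"}, executive_names={"Dana Cohen"}))  # 8
```

None of these signals depends on the wording of the message, which is why this style of check can still fire when the text itself is flawless.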

My Take for Fellow CISOs

The ISOC‑IL “Algorithmic Scams” report is a wake‑up call for CISOs, not just for consumers, because the same techniques are being repurposed for corporate targets.

I believe we are witnessing the industrialization of AI‑powered fraud. The ISOC‑IL “Algorithmic Scams” report is a wake‑up call, not just for consumers but for enterprise security leaders who need to understand how these same techniques are being repurposed for corporate targets.

If you’re a CISO, ask yourself:

- Are your defenses tuned for the generative‑AI era?

- Can your SOC correlate threats that begin outside email?

- Do you have a playbook for responding to brand impersonation and deepfake‑driven impersonation attempts on social platforms?

I’d love to hear from fellow CISOs. What’s working in your organization? Join the conversation and share your thoughts in the comments on my LinkedIn.

Ronen Lago has more than 20 years of experience designing and delivering advanced intelligence systems and cybersecurity solutions. As a former CTO and Head of R&D for Tier‑1 enterprises, he has led global, multidisciplinary teams at Daimler AG, Lockheed Martin, Israeli Aerospace Industries, and Motorola. His expertise spans cybersecurity, big data analytics, IoT, critical infrastructure networks (SCADA/smart manufacturing), machine learning, and AI applications. Ronen is a veteran of an elite military intelligence unit and holds an M.Sc. in Electrical Engineering and Business Management.

