
This is the third post in my three-part series unpacking OpenAI’s June 2025 threat intelligence report and what it signals for enterprise communication security.

Pro Tip for Cybercriminals

Maybe Don't Use AI as Your Personal Stack Overflow

I'm going to tell you something that might surprise you... the most sophisticated AI-powered attacks in OpenAI's June report, "Disrupting malicious uses of AI," weren't stopped by better AI. They were stopped by humans noticing something felt... off.

- One investigator got a random SMS about a TikTok job paying $500 a day.

- Another spotted Chinese characters lurking on a Turkish company's website.

- A third noticed that a "crosshair gaming tool" was asking for way too many system permissions.

Small things. Human things. The kind of pattern recognition that makes you go "wait a minute..."

The Detection Story Everyone's Missing

Everyone's racing to build AI to fight AI, like we're in some digital arms race where the best algorithm wins. But here's what I noticed digging through OpenAI's report...these operations weren't caught in some epic AI-versus-AI showdown.

They were caught because humans did what humans do best: noticed when something didn't add up.

Take the "ScopeCreep" malware campaign. The developers had solid operational security. They used temporary emails, abandoned accounts after single conversations, then rotated their infrastructure. Smart, right?

Except... they kept coming back to ChatGPT for debugging help (doh!). Every error message, every stack trace, every SSL certificate problem...yup, they pasted it all into ChatGPT. They basically created a development diary of their malware. (Hence my advice to cybercriminals: maybe don't use AI as your personal Stack Overflow.)

Your Email Isn't Getting Smarter. Your Attackers Are.

Let me paint you a picture that should worry most folks in security...

The "VAGue Focus" operation generated pitch-perfect business correspondence to US senators. Grammar? Flawless. Tone? Professional. Intent? Malicious as hell.

Your traditional security tools see nothing wrong. Spam filters yawn. Your users think, "Finally, an email from a vendor that doesn't sound like it was written by someone's cousin's nephew who 'knows computers.'"

But here's what I saw in these campaigns:

- Deceptive employment schemes crafted resumes that matched job descriptions perfectly (because AI read the job posting first)

- Social engineers translated their schemes into flawless Swahili, Kinyarwanda, and Haitian Creole (wait, what?)

- Influence operations generated hundreds of supportive comments to make their fake personas look real

Your traditional email security stack? It's checking SPF records, looking for keywords, and running rules and policy workflows...while attackers are playing 3D chess (I know, tired expression, but it's true).

Here's What Actually Worked (And Why It Matters)

You know what stopped these attacks? Investigators noticed that:

- A "news organization" posted exclusively during Beijing business hours

- "American veterans" used British spelling

- Multiple personas had the same weird habit of obscuring faces in profile photos

- Comments appeared faster than humans could possibly type them

None of these are technical indicators. They're human observations.

And this is exactly why I believe in the approach we take at IRONSCALES...we don't pretend AI catches everything. We catch 98 to 99+% of attacks automatically, sure. But that last 1%? That's where your employees come in. (For you ML nerds, I expand on this in "Why AI Alone is Not Enough." Get a big cup of coffee and enjoy!)

When someone reports something suspicious to us (even if they're not sure) we immediately hunt for similar attacks across all 17,000+ organizations we protect. One sharp employee in Milwaukee can protect a company in Melbourne. That's the power of humans in the loop.

Building Your Human Firewall (The Right Way)

I used to think security awareness training was about teaching people to spot obvious scams. You know the ones...terrible grammar, urgent money requests, gift card requests. That stuff's adorable now. Also useless.

Your people need new instincts:

Watch for competency shifts. When that normally barely-literate vendor suddenly sends beautiful prose, ask questions. When the intern's code suddenly looks senior-level... dig deeper.

Question velocity. I read about one operation posting 200 comments in minutes. Humans have limits. When someone responds to 50 emails in 5 minutes with thoughtful, personalized responses...yeah no, that's not dedication, it's automation.
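If you want to turn that instinct into something a script can check, here's a rough sketch. It counts the most messages any one sender fits into a sliding time window. The function names, the 5-minute window, and the limit of 20 are my own illustrative assumptions, not numbers from OpenAI's report or from any product.

```python
from datetime import datetime, timedelta


def max_burst(timestamps, window=timedelta(minutes=5)):
    """Return the largest number of messages inside any single window."""
    times = sorted(timestamps)
    best = 0
    start = 0
    for end, current in enumerate(times):
        # Move the left edge forward until the span fits inside the window.
        while current - times[start] > window:
            start += 1
        best = max(best, end - start + 1)
    return best


def looks_automated(timestamps, window=timedelta(minutes=5), limit=20):
    """Flag a sender whose busiest window exceeds a (hypothetical) human limit."""
    return max_burst(timestamps, window) > limit


# 50 replies, one every 6 seconds: far more than a person writes thoughtfully.
base = datetime(2025, 6, 10, 9, 0, 0)
burst = [base + timedelta(seconds=6 * i) for i in range(50)]
print(looks_automated(burst))
```

Treat a hit as a reason to look closer, not as proof. Mail merges and scheduled sends can trip the same wire.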

Trust your gut about timing. If you're getting "urgent" requests from European partners at weird hours, or Chinese manufacturers are emailing during Spring Festival...something's off.
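The timing check is easy to sketch too. This minimal example converts a message's UTC send time into the sender's claimed local time and asks whether it lands outside a normal workday. The function names, the 8-to-18 workday, and the fixed UTC offset are simplifying assumptions of mine (a fixed offset ignores daylight saving changes and local holidays).

```python
from datetime import datetime, timedelta, timezone


def local_hour(sent_at_utc, utc_offset_hours):
    """Hour of day at the sender's claimed location.

    sent_at_utc must be timezone-aware; the offset is a fixed number of hours.
    """
    sender_tz = timezone(timedelta(hours=utc_offset_hours))
    return sent_at_utc.astimezone(sender_tz).hour


def outside_business_hours(sent_at_utc, utc_offset_hours, start=8, end=18):
    """True when the message was sent outside the assumed local workday."""
    hour = local_hour(sent_at_utc, utc_offset_hours)
    return not (start <= hour < end)


# An "urgent" request from a partner claiming to be at UTC+2, sent 01:30 UTC.
sent = datetime(2025, 6, 10, 1, 30, tzinfo=timezone.utc)
print(local_hour(sent, 2), outside_business_hours(sent, 2))
```

One odd-hours email means little on its own. A partner who only ever writes during someone else's business day is the pattern worth reporting.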

Look for the uncanny valley. AI-generated content is often too perfect. No typos, no personality quirks, no regional expressions. It's like talking to someone who learned English from a textbook: technically correct but somehow...wrong.

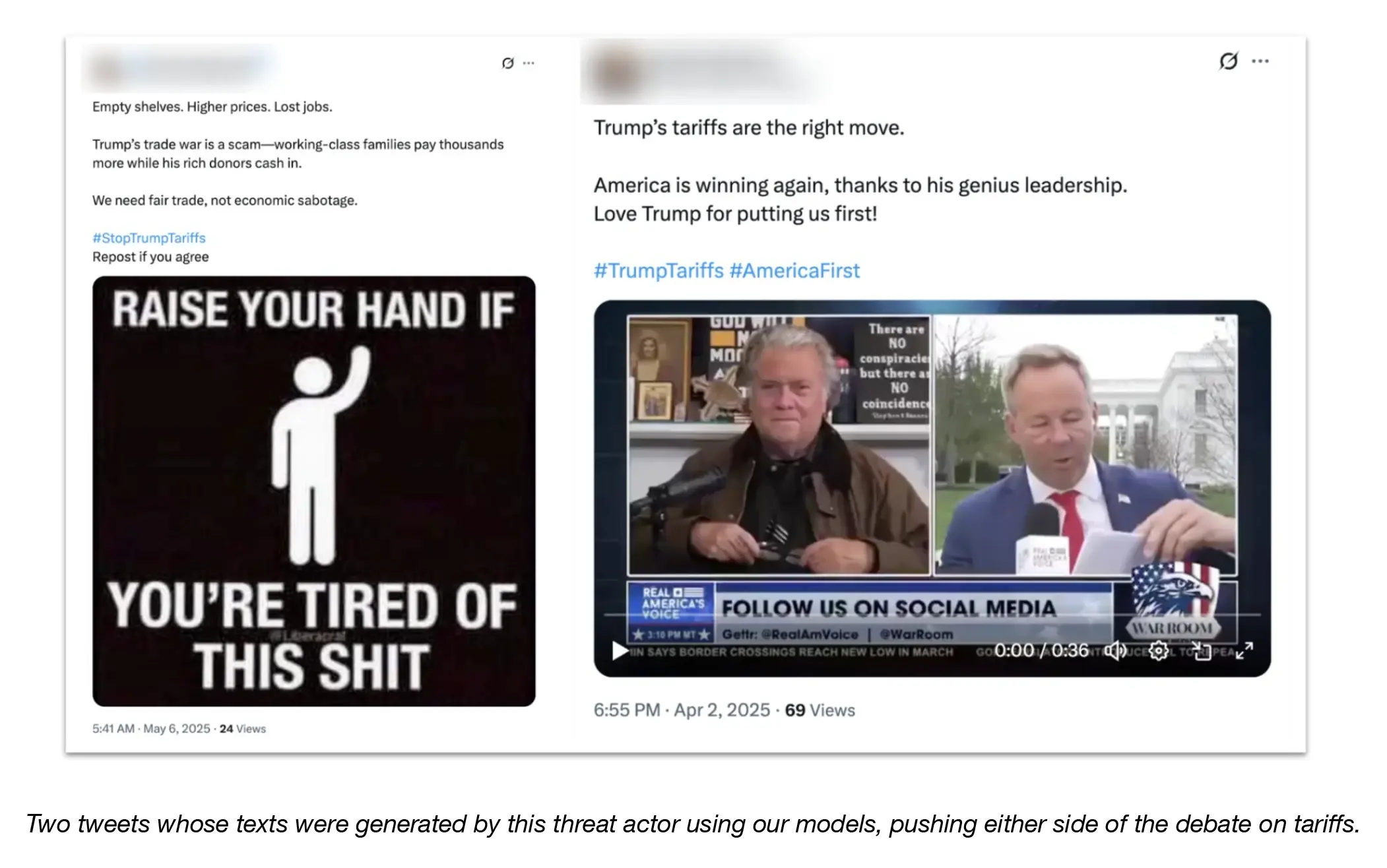

(Page 33 - OpenAI’s June 2025 Threat Intelligence report)


The Secret Weapon You Already Have

Here's what really struck me about OpenAI's report: we're winning battles not with better algorithms, but with better investigation. Cross-platform analysis. Behavioral pattern recognition. Good old-fashioned "this doesn't smell right" intuition.

- The "Sneer Review" operation wrote its own performance review (I'm not making this up)

- The "High Five" campaign pitched its own services to itself

- The "Wrong Number" scammers used ChatGPT to translate their lies but forgot to hide their Cambodia IP addresses, and one of their messages was sent straight to an OpenAI investigator!

These aren't masterminds. They're regular criminals with powerful tools...making very human mistakes.

In our phishing simulation dashboard, we track "clicks before first report," because all it takes is one observant person to protect everyone. That first person who says "this feels weird" becomes the immune system for your entire organization.
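For the curious, here's a toy version of that metric. It is not the dashboard's actual implementation. The event format (a timestamp paired with "click" or "report") and the function name are made up for illustration.

```python
def clicks_before_first_report(events):
    """Count clicks that happened before anyone reported the message.

    events: iterable of (timestamp, action) pairs, where action is
    "click" or "report". Returns None if nobody ever reported.
    """
    clicks = 0
    for _, action in sorted(events, key=lambda event: event[0]):
        if action == "report":
            return clicks
        if action == "click":
            clicks += 1
    return None


# Hypothetical simulation: two clicks land before the first report arrives.
simulation = [(1, "click"), (2, "click"), (3, "report"), (4, "click")]
print(clicks_before_first_report(simulation))
```

The lower that number, the sooner your "immune system" kicked in. A result of None means nobody spoke up at all, which is the case to worry about.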

What This Means for You

The smartest AI in the world gets smarter when humans teach it what "weird" looks like.

That's the lesson from OpenAI's report...and honestly, it's what we at IRONSCALES have believed all along. The best defense isn't AI or humans, it's AI and humans working together.

Your security stack should catch the vast majority of attacks automatically (ours does). For the stuff that's just a little too perfect, a little too clever? That's where your employees become your secret weapon.

But they need new training. Not "don't click suspicious links" training. Investigation training. Pattern recognition training. "Trust your instincts and speak up" training.

Because here's what I've learned...AI can fake a lot of things. It can write perfect emails, generate convincing personas, create detailed technical documentation. But AI can't fake the messy, inconsistent, gloriously imperfect way humans actually behave. Yet.

Your employees know what real human interaction feels like. They just need permission (and training) to trust that knowledge. And they need to know that when they report something, it matters. Not just for your organization...but potentially for thousands of others.

The attackers in OpenAI's report had access to the same AI tools your marketing team uses. The difference? The defenders knew what to look for. Not in the code or the headers or the authentication protocols...

In the human details that no AI (yet) can perfectly fake.

That's your edge. Use it. Report it. Share it.

Because we're all in this together...and sometimes the best defense is just someone saying "hey, this seems weird."
