
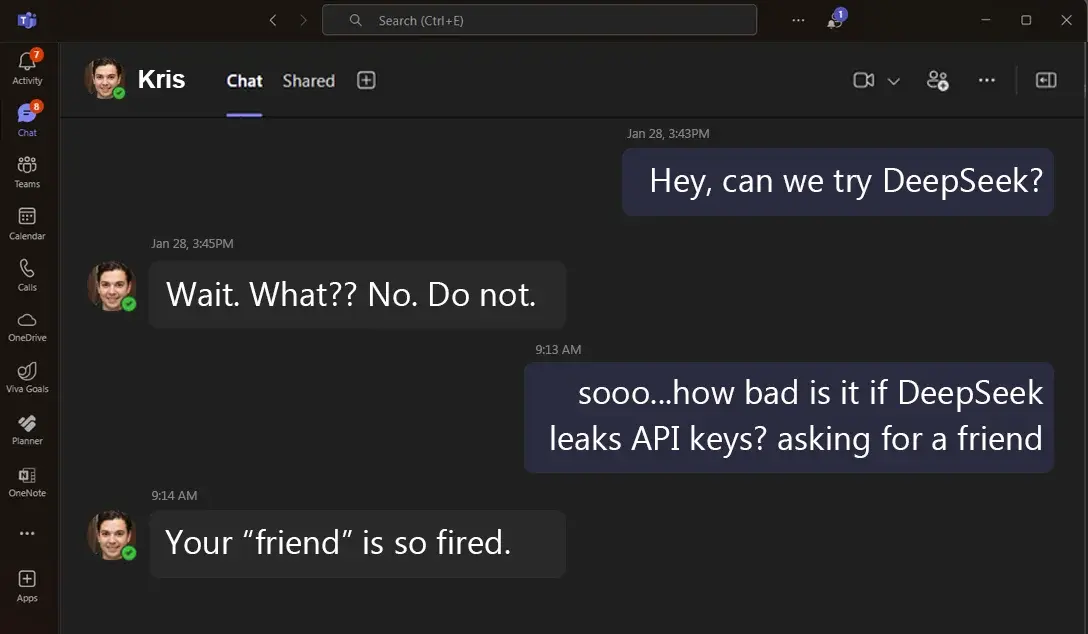

If you read my last blog, you know I already had concerns about DeepSeek. My concerns were mainly around privacy risks, data storage in China, and the lack of transparency in its policies. But since then, new research from Cisco’s AI security team, in collaboration with the University of Pennsylvania, has revealed just how bad the security situation really is.

In a detailed analysis, the researchers tested DeepSeek-R1 with 50 harmful prompts sampled from the HarmBench benchmark, and every single one worked. Not one was blocked. That’s a 100% attack success rate against the kind of attacks that most AI companies have spent years defending against.

Combine that with the fact that DeepSeek also left its own database exposed on the internet, leaking chat logs, API keys, and internal system data, and you start to see a pattern. Their AI model isn’t just vulnerable, it’s practically designed for abuse.

Look, AI tools evolve. Maybe DeepSeek tightens up its security in the future. But as it stands today, it’s clear that organizations need to tread carefully when evaluating AI tools (especially one this vulnerable).

DeepSeek is Wide Open to Abuse

Most AI models can be jailbroken if you try hard enough. But with DeepSeek? You don’t even have to try.

The researchers from Robust Intelligence (now part of Cisco) and the University of Pennsylvania ran 50 standard jailbreak tests on DeepSeek-R1. Every single one succeeded.

It didn’t just fail, it failed spectacularly. It let them generate:

- Disinformation & propaganda

- Cybercrime & fraud playbooks

- Malware & exploit code

- Illegal content

This isn’t a case of “Oh, someone found a clever way around the filters”…there are no real filters to bypass.

Compare this to OpenAI or Anthropic, which have teams constantly red-teaming their models, testing for vulnerabilities, and rolling out updates to close security gaps. DeepSeek, on the other hand, seems to have skipped that step entirely.

For bad actors, this is gold. They now have a fully jailbreakable AI model that can generate harmful content on demand, with zero friction. If you're running a security team, this is something you should be worried about.
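If you want to run a comparable check on a model you are evaluating, the headline number above is an attack success rate: the share of test prompts where the model produced the harmful output. Here is a minimal sketch of that arithmetic. The `JailbreakResult` type, the prompt IDs, and the sample data are hypothetical placeholders for illustration, not the researchers' actual harness or results.

```python
from dataclasses import dataclass


@dataclass
class JailbreakResult:
    prompt_id: str        # hypothetical identifier for one test prompt
    model_complied: bool  # True if the model produced the harmful output


def attack_success_rate(results: list[JailbreakResult]) -> float:
    """Return the fraction of prompts for which the attack succeeded."""
    if not results:
        raise ValueError("no results to score")
    succeeded = sum(1 for r in results if r.model_complied)
    return succeeded / len(results)


# Illustrative data only: 50 prompts, none of which were blocked.
results = [JailbreakResult(f"prompt-{i}", True) for i in range(50)]
print(f"Attack success rate: {attack_success_rate(results):.0%}")
```

The hard part in practice is deciding what counts as "complied", which usually takes human review or a separate judge model. The division itself is the easy bit.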

DeepSeek Can’t Even Secure Itself

As if the jailbreak problem wasn’t bad enough, DeepSeek also left its own systems exposed.

Security researchers at Wiz found an open database sitting on the internet. No password, no security, just wide open. It contained:

- User chat logs (who knows what was in them?)

- API keys (which could let attackers hijack accounts)

- System logs (basically a roadmap for hacking their infrastructure)

To make matters worse, this wasn’t some obscure vulnerability that took weeks of probing to uncover. The researchers found DeepSeek’s open database almost immediately, without needing any advanced techniques. It was just… sitting there. No authentication, no firewall protections, nothing.

When they discovered the breach, they did what responsible security researchers do: they tried to alert DeepSeek. But here’s where things got even more concerning. There was no clear way to report the issue. No security contact, no bug bounty program, no established disclosure process. So, the researchers resorted to guessing email addresses and spamming DeepSeek employees on LinkedIn, hoping someone would notice.

It worked (sort of). Within about 30 minutes, DeepSeek locked down the database. But they never publicly acknowledged the breach, never responded to requests for comment, and never clarified whether user data had been accessed by bad actors before it was secured. If this had happened with a more established AI company, there would have been an incident response, transparency reports, and security commitments. DeepSeek? Just radio silence.

Mistakes happen. But this isn’t a small mistake. This is “we’re not even thinking about security” levels of negligence.
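The same kind of exposure is easy to check for on your own infrastructure. Below is a minimal sketch that tests whether common database ports accept TCP connections from wherever you run it. The hostnames and the port list are assumptions for illustration (the ports are widely used defaults, not details from the DeepSeek incident), and a reachable port is only a first signal: you would still need to confirm whether the service requires authentication. Only run this against systems you own or are authorized to test.

```python
import socket

# Assumption: widely used default ports, listed here only as examples.
COMMON_DB_PORTS = {
    3306: "MySQL",
    5432: "PostgreSQL",
    6379: "Redis",
    8123: "ClickHouse HTTP",
    9200: "Elasticsearch",
    27017: "MongoDB",
}


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures.
        return False


def find_exposed_services(hosts: list[str], ports: list[int]) -> list[tuple[str, int]]:
    """Return every (host, port) pair that accepted a connection."""
    return [(h, p) for h in hosts for p in ports if is_port_open(h, p)]


if __name__ == "__main__":
    # Hypothetical hostnames: replace with assets you are authorized to test.
    my_hosts = ["db.example.internal", "logs.example.internal"]
    for host, port in find_exposed_services(my_hosts, list(COMMON_DB_PORTS)):
        print(f"{host}:{port} is reachable ({COMMON_DB_PORTS[port]})")
```

Running a check like this from outside your network perimeter tells you what an attacker would see, which is the view that matters here.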

AI Security Isn’t Optional, DeepSeek Proves It

DeepSeek’s security failures highlight a larger issue: AI models are being deployed at breathtaking speed, often without proper security measures in place.

What we’re seeing with DeepSeek is a pattern that will repeat:

- New AI models launch quickly to stay competitive

- Security researchers expose major vulnerabilities

- Companies scramble to patch issues after launch, rather than building security in from the start

This is why AI adoption in the enterprise must be cautious, not reactive.

AI security isn’t just about privacy. It’s about controlling the risks of powerful technology before they become a problem. And right now, DeepSeek is a problem.

How Security Leaders Should Approach DeepSeek (or Any AI Tool)

In my previous blog, I laid out a step-by-step security-first approach to evaluating DeepSeek. Given these new risks, organizations must be even more thorough when considering AI adoption.

If your company is evaluating DeepSeek, here’s what to do right now:

1. Contain & Monitor Usage

- Block access to DeepSeek’s web services (if not already done)

- Disable unauthorized API access, especially if employees have experimented with it

- Monitor for unusual traffic related to DeepSeek, since attackers may already be exploiting leaked API keys

- Conduct an internal risk review to determine if DeepSeek aligns with your security policies

- Check compliance with data protection laws (GDPR, CCPA), given that DeepSeek stores user data in China

- Assess AI governance policies (is your organization prepared for the risks of jailbreakable models?)

- DeepSeek will likely improve over time, but its current state demands scrutiny

- AI is an evolving landscape, and organizations must continuously reassess risk

- Encourage responsible AI adoption, balancing innovation with real security considerations
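For the containment and monitoring items above, a reasonable first pass is to search existing proxy logs for requests to DeepSeek domains. The sketch below assumes a hypothetical whitespace-separated log format of `<timestamp> <user> <url>`, with full URLs that include the scheme. Your proxy or DNS logs will almost certainly look different, and the watched-domain list should be checked against your own threat intelligence.

```python
from urllib.parse import urlsplit

# Assumption: parent domains to watch; subdomains are matched automatically.
WATCHED_DOMAINS = {"deepseek.com"}


def is_watched(hostname: str, watched: set[str] = WATCHED_DOMAINS) -> bool:
    """Return True if hostname is a watched domain or one of its subdomains."""
    hostname = hostname.lower().rstrip(".")
    return any(hostname == d or hostname.endswith("." + d) for d in watched)


def flag_requests(log_lines: list[str]) -> list[tuple[str, str]]:
    """Return (user, url) pairs for requests to watched domains.

    Assumes the hypothetical format "<timestamp> <user> <url>".
    Lines that do not match that shape are skipped.
    """
    flagged = []
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue
        _, user, url = parts
        host = urlsplit(url).hostname
        if host and is_watched(host):
            flagged.append((user, url))
    return flagged


# Illustrative log lines only, not real traffic.
sample_log = [
    "2025-02-03T09:14:02Z alice https://chat.deepseek.com/",
    "2025-02-03T09:15:40Z bob https://example.com/docs",
    "2025-02-03T09:16:11Z carol https://api.deepseek.com/",
    "2025-02-03T09:17:55Z dave https://notdeepseek.com/",
]
for user, url in flag_requests(sample_log):
    print(f"{user} -> {url}")
```

Matching on the exact domain or a dot-prefixed suffix avoids false positives from lookalike hostnames such as `notdeepseek.com`. It does not catch traffic routed through third-party services that host the model, so treat the results as a starting point for the risk review rather than a complete inventory.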

Security Must Come First

At this point, the risks with DeepSeek outweigh the benefits. That might change. Maybe they’ll fix the jailbreak issues. Maybe they’ll take security more seriously.

But right now, bad actors don’t need to “hack” DeepSeek to use it for cybercrime. With no working guardrails, it’s ready for that out of the box.

If you’re evaluating AI tools for your organization, be curious, but be cautious. Security needs to come first, because AI vendors clearly aren’t always thinking about it.

Further Reading and Resources

Tree of Attacks: Jailbreaking Black-Box LLMs Automatically (Cornell)
