In recent times, the world of cybersecurity has been forced to confront a new and unsettling reality: the emergence of malicious Generative AI, often embodied by ominous names like FraudGPT and WormGPT. These tools, hailing from the darker corners of the internet, present a unique challenge to digital security. In this blog post, we will explore the nature of Generative AI fraud, dissect the messaging from those who productized it, and assess these tools' potential impact on the cybersecurity landscape. While it's important to remain vigilant, we'll also emphasize that the situation, although concerning, is not yet cause for widespread panic.

What are FraudGPT and WormGPT?

FraudGPT is a subscription-based malicious Generative AI that uses machine learning to create deceptive content. Unlike ethically constrained AI models, FraudGPT knows no boundaries, making it a versatile tool for a range of nefarious purposes. It can craft spear-phishing emails, fraudulent invoices, fake news articles, and more, which can be used in cyberattacks, online scams, public-opinion manipulation, and, allegedly, the creation of "undetectable malware and phishing campaigns".

WormGPT, on the other hand, is FraudGPT's sibling in the dark realms of AI. Developed as an unsanctioned counterpart to OpenAI's ChatGPT, WormGPT lacks ethical safeguards and can respond to queries related to hacking and other illegal activities. While its capabilities are limited compared to the latest AI models, it represents a stark example of the evolution of malicious Generative AI.

Posturing from the GPT Villains

The creators and purveyors of FraudGPT and WormGPT have not hesitated to advertise their wares. These AI-based tools are marketed as "cyber-attacker starter kits," providing a range of attack resources. They are sold on a subscription basis at affordable prices, putting these tools within reach of novice cybercriminals.

Upon deeper investigation, the tools themselves don't appear to do much beyond what a cybercriminal could already get from existing generative AI tools by using prompt workarounds. This may be due to the older model architecture and the training data used. The creator of WormGPT claims to have built the model using a diverse range of data sources, with a focus on malware-related data. However, they have not disclosed the specific datasets used.

Similarly, the marketing surrounding FraudGPT does little to inspire confidence in the LLM's performance. On dark web forums, the creator of FraudGPT describes it as cutting-edge, boasting that the LLM can create "undetectable malware" and identify websites vulnerable to credit card fraud. However, beyond stating that it is a variant of GPT-3, the creator provides little information about the LLM's architecture and no evidence that it has produced undetectable malware, leaving much to speculation.

How Malicious Actors Will Harness GPT Tools

The inevitable use of GPT-based tools like FraudGPT and WormGPT is still a real concern. These AI systems can generate convincing content, making them attractive for activities like crafting phishing emails, pressuring victims into fraudulent schemes, or even generating malware. Security tools and countermeasures do exist to address these new breeds of attacks, but the challenge continues to grow.

Some potential use cases of Gen-AI tools for fraud include:

Enhanced Phishing Campaigns:

These tools can automate the creation of highly personalized phishing emails (spear phishing) in multiple languages, increasing the chances of success. However, their effectiveness in evading advanced email security tools and vigilant recipients remains questionable.

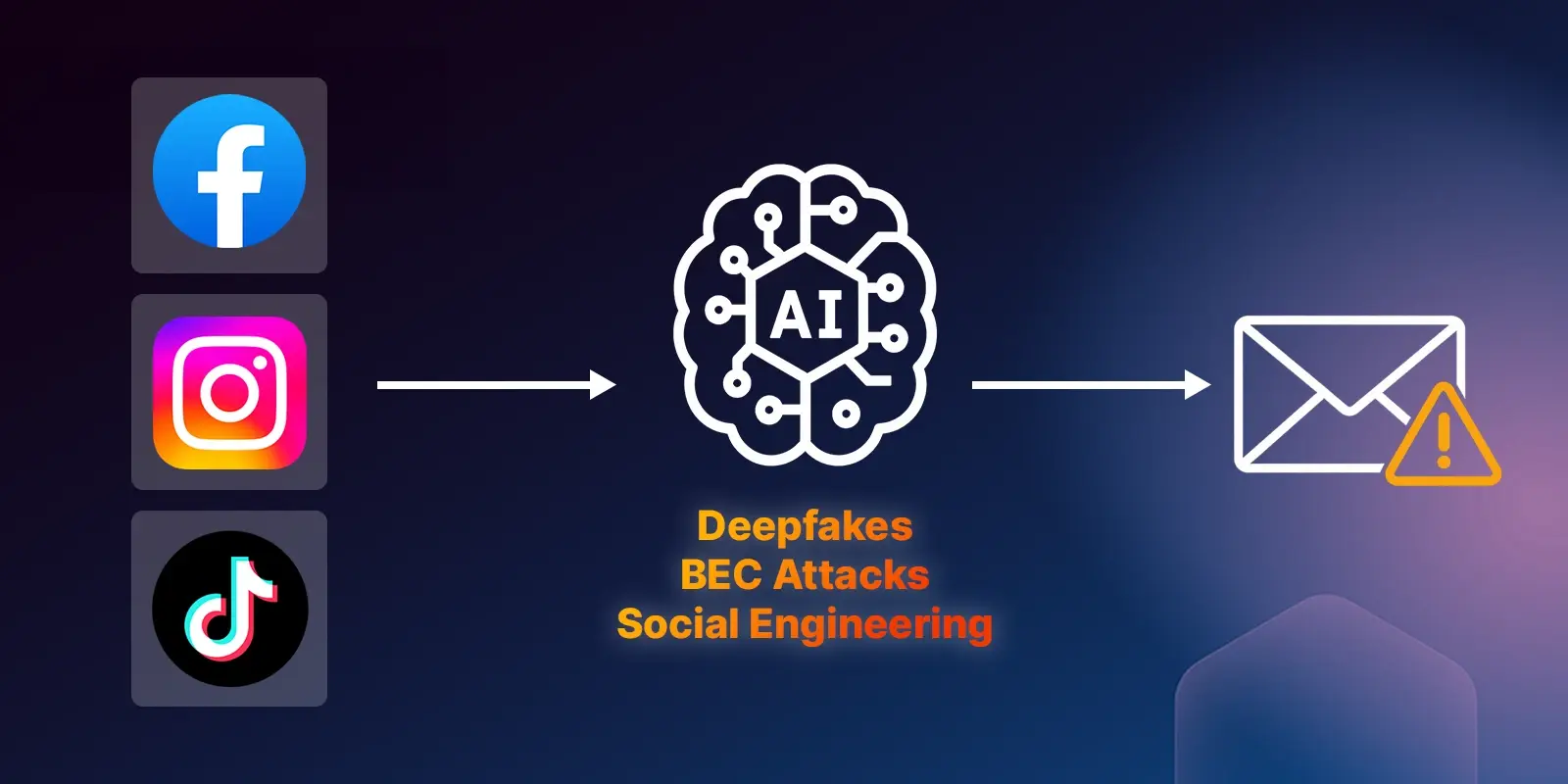

Accelerated Open Source Intelligence (OSINT) Gathering:

Attackers can expedite the research phase of their operations by using these tools to gather information about targets, such as personal details, preferences, and behaviors, as well as detailed company information.

Automated Malware Generation:

Generative AI has the concerning capability to generate malicious code, streamlining the creation of malware, even for individuals with limited technical expertise. However, the code these tools produce may still be basic and require additional work before it can be used in a successful cyberattack.

Weaponized Gen-AI Impact on the Threat Landscape

The emergence of FraudGPT, WormGPT, and similar malicious Generative AI tools undoubtedly raises concerns in the cybersecurity community. There is potential for more sophisticated phishing campaigns and a proliferation of generic malware. Cybercriminals may leverage these tools to lower the entry barrier into cybercrime, attracting individuals with limited technical expertise.

It's important not to succumb to alarmism in the face of these emerging threats. FraudGPT and WormGPT, while intriguing, aren't yet game-changers in the world of cybercrime. Their limitations, their lack of sophistication, and the fact that they are not built on the most advanced AI models leave them far from invincible against more sophisticated AI-powered defenses, such as IRONSCALES, that can automatically detect AI-generated spear-phishing attacks. While the actual effectiveness of FraudGPT and WormGPT remains unverified, it is important to note that social engineering and targeted spear phishing have already proven to be successful tactics. These malicious AI tools, however, make such phishing campaigns more accessible and easier for cybercriminals to craft.

As these tools continue to evolve and gain popularity, organizations must brace themselves for an onslaught of highly targeted and personalized attacks on their employees.

Don't Fear Today, but Prepare for Tomorrow

The emergence of Generative AI fraud, as demonstrated by tools such as FraudGPT and WormGPT, is a cause for concern in the cybersecurity landscape. However, it is not surprising, and security solution providers have been diligently working to address this issue. While these tools present more difficult challenges, they are far from unbeatable: the criminal underground is still in the early stages of adopting them, while security vendors have been at this for a long time. AI-powered security tools, like IRONSCALES, already exist to combat AI-generated email threats effectively.

To stay ahead of the evolving threat landscape, organizations should invest in an advanced email security solution that provides:

- Real-time advanced threat protection with specific capabilities to defend against social engineering attacks like BEC, impersonation, and invoice fraud.

- Automated spear-phishing simulation testing to empower end users with personalized employee training.

Additionally, it is important to stay informed about developments in Generative AI and the tactics malicious actors employ with these technologies. While there's no need for panic today, preparedness and vigilance are the keys to mitigating the potential risks posed by the adoption of Generative AI for cybercrime.
